Markov decision process, reinforcement learning and multi-armed bandit

Seminar Announcement

Thursday, January 5, 2017, 2:30 PM

Conference Room 308, School of Information Management and Engineering

Shanghai University of Finance and Economics (west side of Teaching Building No. 3)

100 Wudong Road, Yangpu District, Shanghai

Topic

Markov decision process, reinforcement learning and multi-armed bandit

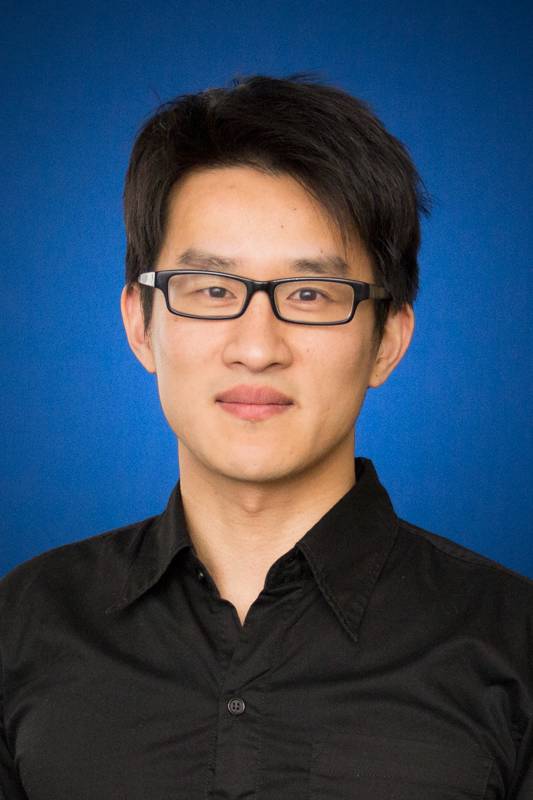

Speaker

Paul Weng

SYSU-CMU Joint Institute of Engineering

Paul Weng is currently a faculty member at SYSU-CMU JIE (Joint Institute of Engineering), a partnership between Sun Yat-sen University (SYSU) and Carnegie Mellon University (CMU). During 2015, he was a visiting faculty member at CMU. Before that, he was an associate professor of computer science at Sorbonne Universités, UPMC (Pierre and Marie Curie University), Paris. He received his master's degree in 2003 and his Ph.D. in 2006, both from UPMC. Before joining academia, he graduated from ENSAI (French National School in Statistics and Information Analysis) and worked as a financial quantitative analyst in London.

His recent research focuses on (sequential) decision-making under uncertainty, multicriteria decision-making, qualitative/ordinal decision models, and preference learning/elicitation.

Abstract

Markov decision processes (MDPs) and reinforcement learning (RL) are general frameworks for tackling sequential decision-making problems under uncertainty. Both rely on numerical rewards to quantify the value of actions in states, and on expectation to quantify the value of policies. In practice, these assumptions may not hold, as has also been observed in other recent work (e.g., inverse reinforcement learning, preference-based reinforcement learning, dueling bandits…). In this talk, we investigate the use of an ordinal decision criterion based on quantiles (also known as Value-at-Risk in finance). For both the MDP and the RL settings, we propose solving algorithms and present some initial experimental results. If time permits, we will also present some theoretical results on multi-armed bandits, which are a special case of RL.
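To illustrate how a quantile criterion can differ from expectation, here is a minimal sketch in Python. It is not the speaker's algorithm: the two reward distributions (`risky`, `safe`), the helper names, and the choice of the lower quantile definition are all assumptions made for illustration only.

```python
def expectation(dist):
    """Expected value of a discrete distribution given as (outcome, probability) pairs."""
    return sum(x * p for x, p in dist)


def lower_quantile(dist, tau):
    """Smallest outcome x such that P(X <= x) >= tau (lower tau-quantile).

    Only the ordering of outcomes is used, never their magnitudes,
    which is why a quantile can serve as an ordinal criterion.
    """
    cumulative = 0.0
    for x, p in sorted(dist):
        cumulative += p
        if cumulative >= tau - 1e-12:  # small tolerance for floating-point sums
            return x
    return max(x for x, _ in dist)


# Hypothetical outcome distributions of two policies (illustrative numbers only).
risky = [(0.0, 0.4), (10.0, 0.6)]  # reward 0 with prob. 0.4, reward 10 with prob. 0.6
safe = [(4.0, 1.0)]                # reward 4 with certainty

# Which policy each criterion prefers.
prefers_risky_by_mean = expectation(risky) > expectation(safe)
prefers_risky_by_q25 = lower_quantile(risky, 0.25) > lower_quantile(safe, 0.25)
prefers_risky_by_median = lower_quantile(risky, 0.5) > lower_quantile(safe, 0.5)

print("expectation prefers risky:", prefers_risky_by_mean)
print("0.25-quantile prefers risky:", prefers_risky_by_q25)
print("median prefers risky:", prefers_risky_by_median)
```

In this toy setting, the preferred policy depends on the criterion and on the quantile level, which is the kind of behavior a quantile-based decision model is meant to capture.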